How many times have you sent an article to a friend or family member because you knew they’d like it? What made you decide to press that “share” button? Maybe the article was really well written and the topic was relevant to the person’s interests. Maybe it was about something you were just talking about with them. Maybe it was just incredibly endearing like Tian Tian the Panda enjoying a snow day at Washington DC’s National Zoo – how could you not share that?

As people, we instinctively share content as a quick way to connect with friends and family. The big question is: how does a machine know to send Aunt Sally the same article on cat cafes that you would send her? Well, that’s where machine learning comes in.

Side note: Am I the only one who’s overwhelmed by the number of places we can share things to?

Let’s focus on recommending content that relates to the things your reader talks about online. Think of this like your friend sending you an article about cooking because you post about cooking a lot on Twitter, Facebook, Reddit, Instagram, and your Pinterest page is full of recipes.

Why recommend based on topic?

Demographic data is often incorrect: Some people aren’t interested in the same things as other people in their demographic. For example, I’m an urban professional woman in my twenties. This means I am constantly being targeted with content about makeup, celebrities, and hairstyles. However, a scan through my Facebook or Twitter shows that I post about start-ups, sentiment analysis, the Middle East… topics I’m much more interested in reading about. I’m certainly an exception, but imagine being able to capture the ≈25% of users who don’t match up with their demographic.

For the sake of an example, let’s look at the movie Mean Girls. Cady (Lindsay Lohan) represents the ≈25% of the demographic that watches CNN instead of E!. Targeting “The Plastics” with Sephora ads is a no-brainer, but those ads are wasted on high school girls who fight consumerism.

New readers: When you get new users, it’s important to impress them immediately or you’ll lose them. If you don’t have any data yet on which articles they like, recommending content based on topic is a great place to start. Engaging new readers – it could make or break you.

Not enough similar viewers: If you don’t have a lot of viewers or a lot of viewers within a circle, it’s going to be tricky to recommend content based on what other people are reading.

The “Echo Chamber” effect: If you rely entirely on recommending content to users that is similar to the content viewed by other users, bad recommendations can be poisonous. If some readers are reading poor choices because they happen to be the best of what’s available to them, you’ll interpret this as interest and the cycle will continue.

Build a Topic Based Article Recommender

The Task: To recommend articles to someone based on their inferred interests from something they have said.

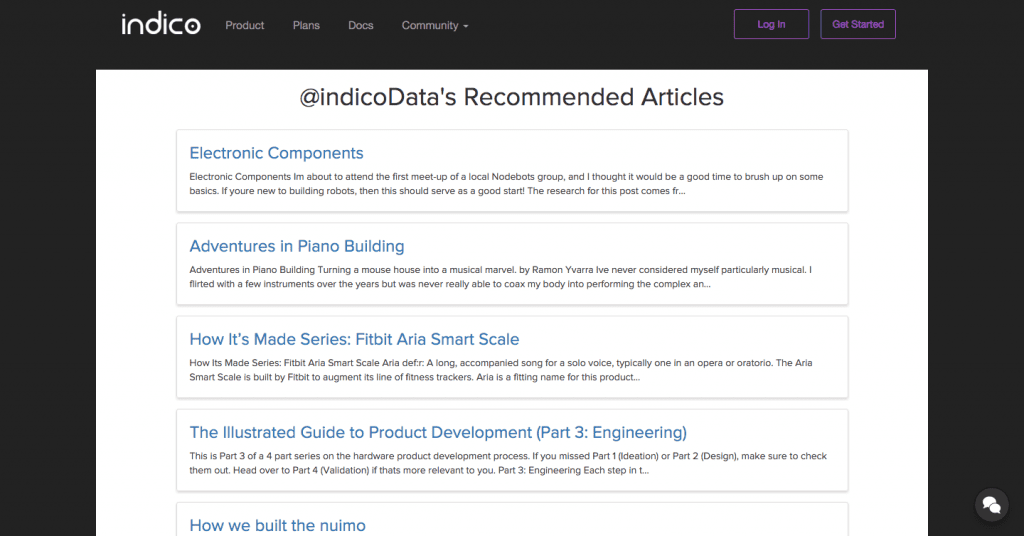

If topic analysis can help make better recommendations, how difficult is it to build a topic based recommender? Turns out, if you have access to a solid topic tagging API, you can do it in less than 100 lines of code. Check out my demo!

Getting Started

If you’d like to follow along and build the recommender yourself, clone my GitHub repository and check out the skeleton branch, which contains all the helper functions, but leaves the major functions stubbed for you to fill in as we go along:

    git clone https://github.com/IndicoDataSolutions/content_recommendation.git
    cd content_recommendation
    git checkout skeleton

For my dataset, I’ve used a selection of articles from a popular social journalism website. I’ve saved my data as a list of dictionary objects. For your dataset, I recommend compiling about 1000 articles for a high-quality recommender.

Format your data like this and save it to a file called articles.ndjson in the root of the project:

    [{
        "title": "Title of the article 1",
        "content": "Body text of the article 1"
    }, {
        "title": "Title of the article 2",
        "content": "Body text of the article 2"
    }]

I’ve provided you with a few helper functions which we’ll use for data manipulation:

load_data(filename) – loads JSON data from a file

dump_data(filename, data) – dumps JSON data to a file

batches(l, n) – yields successive n-sized batches from l
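These helpers already ship with the repository, so there is nothing to write here. To make the batching behaviour concrete, though, here is a minimal sketch of what a helper like batches could look like. It is an illustration based only on the one-line description above, not the exact code from the repository, and the sample articles are made up.

```python
def batches(items, n):
    """Yield successive n-sized batches from items (the last one may be shorter)."""
    for start in range(0, len(items), n):
        yield items[start:start + n]


# Made-up data: 250 placeholder articles split into batches of 100
articles = [{"title": "Article %d" % i} for i in range(250)]
batch_sizes = [len(batch) for batch in batches(articles, 100)]
print(batch_sizes)
```

With 250 articles and a batch size of 100, you get two full batches and one final batch holding the remaining 50.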

Once you’ve prepared your data, run the script to make sure everything is connected properly.

Get Set Up with indico

In order to get set up, follow our Quickstart Guide. It will walk you through the process of getting an API key and installing the indicoio Python library. If you run into any problems, check the Installation section of the docs.

Assuming you’ve set up your account and done the necessary installations, as well as setting up a data file, you should be able to run the code on the master branch.

Part 1: Augment Articles with Text Tags to identify Topics

In order to save time when recommending articles, we’ll tag all of the articles in advance. In this section, we’ll add a list of the most relevant text tags to each article dictionary.

I’ve provided augment_data(), which loads up articles, batches them into groups of 100 to be analyzed, and writes the augmented articles to another file.

All we need to do is fill in add_indico_text_tags(batch), the function that takes in the batch of articles from augment_data() and returns the same batch, except with the text tag lists added. indico’s batch functionality allows us to process batches of samples at the same time, rather than sending each article in a separate request, which drastically expedites the process. indico recommends that users limit batch size to 100 samples for best performance, so we’ll consider 100 articles at a time.
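If it helps to see the overall flow before filling in the tagging function, here is a rough, in-memory sketch of the load, batch, tag, and collect loop described above. The names augment_articles and fake_tagger are hypothetical and exist only for this illustration; the real augment_data() in the repository also reads from and writes to files, and fake_tagger stands in for add_indico_text_tags so the sketch runs without an API key.

```python
def augment_articles(articles, tag_batch, batch_size=100):
    """Tag articles in batches and return the augmented list.

    tag_batch is any function that takes a list of article dicts and
    returns the same list with extra fields added.
    """
    augmented = []
    for start in range(0, len(articles), batch_size):
        batch = articles[start:start + batch_size]
        augmented.extend(tag_batch(batch))
    return augmented


def fake_tagger(batch):
    # Hypothetical stand-in for add_indico_text_tags: no API call is made.
    for article in batch:
        article["text_tags"] = [("placeholder", 1.0)]
    return batch


# Made-up data: 230 placeholder articles, processed 100 at a time
articles = [{"title": "t%d" % i, "content": "body %d" % i} for i in range(230)]
tagged = augment_articles(articles, fake_tagger)
print(len(tagged), tagged[0]["text_tags"])
```

Passing the tagger in as an argument is just a convenience for this sketch: it lets you swap the placeholder for the real function once it is written.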

First, we grab the content for each article in the batch and analyze the content. For each article in article_texts, we now have a corresponding dictionary in text_tags_dicts, which contains a mapping between 111 categories and their correlation to the article. By using a threshold of 0.1, we ignore tags that are not very correlated to the article.

    article_texts = [article['content'] for article in batch]
    text_tags_dicts = indicoio.text_tags(article_texts, threshold=0.1)
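To get a feel for what the threshold does, here is a small offline sketch that applies the same kind of cutoff to a hand-written dictionary. The function apply_threshold is hypothetical, the scores are invented for illustration, and whether the API treats a score of exactly 0.1 as kept or dropped is an assumption on my part.

```python
def apply_threshold(tag_scores, threshold=0.1):
    """Keep only the tags whose score is at least threshold."""
    return {tag: score for tag, score in tag_scores.items() if score >= threshold}


# Invented scores for one article; real responses cover many more categories
example_scores = {"cooking": 0.62, "nutrition": 0.21, "gardening": 0.04, "politics": 0.01}
kept = apply_threshold(example_scores)
print(sorted(kept))
```

Only cooking and nutrition survive the cutoff here, which keeps each article’s tag list short and focused on the topics that actually describe it.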

Next, we iterate through the articles and add the corresponding text tags to each article:

    for i in range(len(batch)):
        text_tag_dict = text_tags_dicts[i]
        # list() keeps the (tag, score) pairs JSON-serializable on Python 3
        batch[i]['text_tags'] = list(text_tag_dict.items())

The finished function looks like this:

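    def add_indico_text_tags(batch):
        # Send the whole batch to the API in a single request
        article_texts = [article['content'] for article in batch]
        text_tags_dicts = indicoio.text_tags(article_texts, threshold=0.1)
        # Attach each article's (tag, score) pairs to its dictionary
        for i in range(len(batch)):
            text_tag_dict = text_tags_dicts[i]
            # list() keeps the pairs JSON-serializable on Python 3
            batch[i]['text_tags'] = list(text_tag_dict.items())
        return batch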

Part 2: Choose which articles to show a user based on their statement

Now that we have tagged each article with the topics that are most related to it, we are ready to select articles for a user based on something they have said.

I’ve written a function, recommend(user_statement), that outlines this process. We estimate the user’s interests based on what they’ve said. Then, we load up our articles, sort them by how closely they match the user’s interests, and discard all but the ten best choices.

    def recommend(user_statement):
        # Estimate the user's interests from their statement
        interests = get_user_interests(user_statement)
        data = load_data('indicoed_articles.ndjson')[0]
        # Best matches first
        sorted_by_score = sorted(data, key=lambda x: score_by_tag_match(x, interests), reverse=True)
        # Keep only the ten best matches
        del sorted_by_score[10:]
        return sorted_by_score

get_user_interests(user_statement) and score_by_tag_match(article, interests) are both stubbed, so we’ll fill them in.

We send the user’s statement through text tags to get a dictionary of tags that match the statement. Then we sort them and choose the top five. These tags represent our guess as to what the user is interested in based on what they are talking about.

    def get_user_interests(statement):
        tag_dict = indicoio.text_tags(statement, top_n=5)
        sorted_tags = sorted(tag_dict.items(), key=lambda tup: -tup[1])
        return sorted_tags

In the next function, score_by_tag_match(article, interests), we calculate the extent to which the text tags of an article match the text tags of the user’s interests.

We iterate through both sets of tags (user interests and article topics), find matches, and multiply the importances. By summing these up we give priority to articles that match in more than one category, as well as giving priority to articles that are matched on more representative tags.

    def score_by_tag_match(article, interests):
        score = 0
        for article_tag in article['text_tags']:
            for interest_tag in interests:
                if article_tag[0] == interest_tag[0]:
                    score += article_tag[1] * interest_tag[1]
        return score
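To see the scoring rule in action without calling the API, here is a small worked example on hand-made data. The function score_with_lookup is a hypothetical variant that applies the same multiply-and-sum rule using a dictionary lookup instead of nested loops; it assumes each tag appears at most once per list. The articles, tags, and scores are all invented for illustration.

```python
def score_with_lookup(article, interests):
    """Sum of (article score * interest score) over the tags both share."""
    interest_weights = dict(interests)
    return sum(weight * interest_weights.get(tag, 0)
               for tag, weight in article["text_tags"])


# Invented user interests and articles
interests = [("cooking", 0.5), ("travel", 0.5), ("sports", 0.25)]
articles = [
    {"title": "Weeknight pasta", "text_tags": [("cooking", 0.5)]},
    {"title": "Election recap", "text_tags": [("politics", 0.75)]},
    {"title": "Street food tour", "text_tags": [("cooking", 0.5), ("travel", 0.25)]},
]

ranked = sorted(articles, key=lambda a: score_with_lookup(a, interests), reverse=True)
for article in ranked:
    print(article["title"], score_with_lookup(article, interests))
```

“Street food tour” matches on two tags (0.5 × 0.5 + 0.25 × 0.5 = 0.375), so it outranks “Weeknight pasta”, which matches on one (0.5 × 0.5 = 0.25). “Election recap” shares no tags with the user and scores zero.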

With these functions filled in, our code should match the master branch. Now you have an article recommender in less than 100 lines of code! Try putting in lots of different user statements and see what articles are recommended.

Take it to the Next Level

Stronger User Statements: In my demo, I’ve integrated with Twitter to grab the tweets for @indicoData and use these tweets as my user statement. By analyzing a bunch of tweets, rather than a short string, to determine user interest, you’ll get a much more robust recommender. You’ll also be able to automatically grab user statements for any user you want. Other great sources of user statements include chat logs, reviews, emails, and Facebook profiles.
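One possible way to combine many statements is to tag each one separately and average the scores per tag. The sketch below shows that idea on invented numbers; it is not necessarily how the demo does it, and the function names average_tag_scores and top_interests are hypothetical. A tag that is missing from a statement is treated as a score of zero for that statement.

```python
from collections import defaultdict


def average_tag_scores(per_statement_scores):
    """Average each tag's score across a list of {tag: score} dictionaries."""
    totals = defaultdict(float)
    for scores in per_statement_scores:
        for tag, score in scores.items():
            totals[tag] += score
    count = len(per_statement_scores)
    return {tag: total / count for tag, total in totals.items()}


def top_interests(per_statement_scores, top_n=5):
    """Return the top_n (tag, averaged score) pairs, highest score first."""
    averaged = average_tag_scores(per_statement_scores)
    return sorted(averaged.items(), key=lambda pair: -pair[1])[:top_n]


# Invented tag scores for two tweets
tweet_scores = [
    {"cooking": 0.5, "travel": 0.25},
    {"cooking": 0.25, "startups": 0.5},
]
combined = top_interests(tweet_scores, top_n=2)
print(combined)
```

The result has the same list-of-pairs shape that get_user_interests returns, so it could be handed straight to score_by_tag_match.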

Beyond Text Tags: For simplicity, I limited this project to using only the Text Tags API. However, indico offers a wide variety of text APIs that are well suited for analyzing articles. Some other great APIs to try:

Political Sentiment – Recommend Articles with a similar Political Slant to a sample of text.

Sentiment – Recommend Articles with a similar positivity to a sample of text.

Keyword Extraction – Recommend Articles centered on keywords in a sample of text.

Relevance [Beta] – This beta offering determines how relevant query terms and phrases are to a given document.

Text Features [Beta] – This beta offering converts a piece of text into an array of numbers that can be used to compare it with other pieces of text.
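For the Text Features idea, one common way to compare two arrays of numbers is cosine similarity. The sketch below is a plain-Python illustration of that comparison, not part of the indico library, and the vectors are tiny invented stand-ins for real feature arrays, which would be much longer.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Invented feature vectors for a user statement and two articles
statement_vec = [1.0, 0.0, 1.0]
article_a_vec = [2.0, 0.0, 2.0]   # points the same way as the statement
article_b_vec = [0.0, 3.0, 0.0]   # shares nothing with the statement

similarity_a = cosine_similarity(statement_vec, article_a_vec)
similarity_b = cosine_similarity(statement_vec, article_b_vec)
print(similarity_a, similarity_b)
```

Articles whose vectors point in a similar direction to the user’s statement score close to 1, so sorting by this value would give you a ranking much like the tag-based one.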

Technology has come a long way since the Netflix algorithm started recommending content in the late 2000s. It seemed as if only big money could solve these issues and only Data Scientists with PhDs could ever create something this robust. Now, with indico, the power is in your own hands.

Need to analyze a large amount of data? Email us at contact@indico.io. We’re always here to help.